During the summer I worked at a startup, based around the concept of screen sharing with zero-configuration on one end. That means the one viewing the screen cast doesn’t need an application or plugin, they just need a browser on a PC or smart device.

This is useful for customer service agents who want to quickly show their clients how to do things instead of describing to them “click on account, click on advanced, click on edit.” Or for the salesman who wants to go over contract stipulations or edit a form with a client on the phone. Or for someone showing their grandmother how you change screen resolutions or font size.

Shaky foundations

The proof of concept server, written by one of the founders, was written in Javascript using Node.js to forward socket.io data carrying messages and image data across the network. Node.js and Javascript are fine for quick functionality as such, but you inherit alot of Javascript’s problems. It’s very single threaded, and variable assignment is shaky at best. F.eg. did I assign the value, or a reference? Will this function variable work as I expect in another context? Where should I bind ‘this’?

The merit of using Node.js in this project is that it uses Javascript on the client sides anyway, so the solution would consistently be using one language. And Node.js has alot of code-availability.

The downsides are alot of inconsistent behaviours due to side-effects of having global variables, that come in with 1st party and 3rd party code, and it’s just not scalable without using tricks and hacks to either make sure you launch an instance for each session somewhere and have a proxy that makes sure both ends consistently contact the same instance, or have a predefined pool of instances that the load balancer would need to consistently send clients to.

Erlang to the rescue

Rather than doing all that I decided to use Erlang, which I’m familiar with, having used it while working on Telecom systems in years past.

What you get with Erlang and OTP are a lot of tools for solving the issues you’d eventually run into using Node.js. Things like scalability over many nodes using message passing, lightweight processes, and a reasonably fast replicated directory for things like sessions from the Mnesia database.

Giddy-up, Cowboy

What I then needed was a HTTP framework, and that’s where Cowboy comes in. Cowboy’s OTP-derived framework of using handlers for tasks, by design, gives you concurrency, as an Erlang process is launched for each connection made to the server which then calls my code. The OTP model also gives you state which is passed on to handler functions via the main loop of the gen_server-esque handlers Cowboy expects us to provide.

WebSockets are becoming widespread technology

Since something like 86% of browser traffic is from browsers supporting WebSockets, and 96% of browsers in our native Iceland, I decided to design for raw WebSockets rather than rely on frameworks such as socket.io, sockjs or bullet. For one these frameworks don’t work consistently across the different transports. They add processing overhead. And they, in the case of socket.io, work by upgrading the connection. Giving you the poorest possible connection initially and eventually upgrading to WebSocket which 86% already support. Since Service Agents will be connecting via Chrome extension, you can expect them to have working WebSockets.

The rest get REST

And so, I decided to design for WebSockets first and support the remainder of clients with simple REST functionality, where images get buffered and replaced.

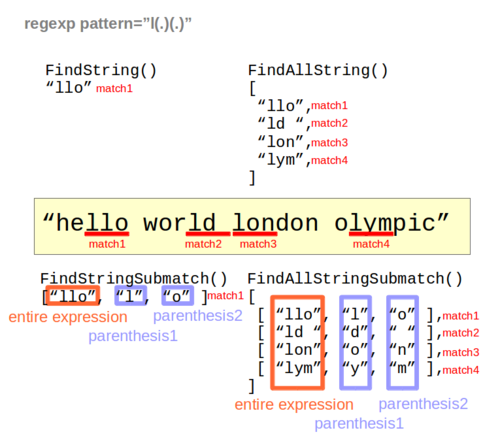

Most developers using WebSockets rely on JSON or arrayBuffers for carrying objects safely across the wire. And while JSON may work fine in Javascript, being that it’s almost identical to Javascript objects already, and the decoding and stringifying of objects to JSON and vice versa has been heavily optimized, I didn’t really need all that much JSON handling in the server code. I mostly needed to know the type of message coming in so I’d know what to do with it.

For this I implemented a custom protocol where all messages transported client-to-server begin with a header that has the letters cw, then 8bits for the length of an identifier, then the identifier itself and after that comes the stringified JSON.

If the message is intended for the server to handle, I decode the JSON using jiffy’s return_maps decoder and work on it from there.

The OTP-esque model of Cowboy’s handlers

When I receive a plaintext message via WebSockets in my Cowboy handler I get a call to websocket_handle({text, Data}, Req, State). What I do is parse that message through pattern matching and then send it back to me as a tuple of {identifier, Payload}. It then lands at websocket_info({identifier, Payload}, Req, State). There I use helper functions to determine where to route the message. If it’s intended for the agent, I check our State to see if I’m the Agent. If I am in fact the Agent, I return {reply, {text, Payload}, Req, State} which sends the message over to the Agent via his websocket. If I’m the Viewer according to the state, I check if my state has a counterpart registered (which would be the Agent) and send it to him. If I don’t have a counterpart, either because the connection is fresh or the counterpart has instructed us to delete them because they’re going down, I look in our session directory to find the right counterpart. That counterpart will then receive the exact same message, and since he is the Agent, he returns as previously mentioned.

Mnesia doesn’t forget

Our session directory, like I mentioned earlier, is based on mnesia. Using ram_copies only and replicating by using mnesia’s extra_nodes functionality. I don’t expect to do frequent lookups per session in our directory, but I expect the lookup to be fast. I also don’t care about saving sessions to disk if one or more nodes die.

But what about the REST fallback? To implement that I designed a simple gen_server that receives the same messages as a websocket handler would receive, essentially imitating it. The gen_server registers as a Viewer, updates his state when he receives new frames and returns those to the Viewer when he requests them via our REST service.

Transparent message passing

Message passing in Erlang is transparent, and I don’t need to worry whether the Pid I’m sending to is on the local node or remote, and so I gain clustering capabilities by using nginx as a proxying load balancer forwarding requests to nodes on its private network. Therefore it’s common for the sessions to have Agent connected to one node, while the Viewer is connected to another. And given multiple cores or processors on each node we can reasonably expect each end to have separate scheduling and CPU thread. And all that adds up to low latency communications between Agents and Viewers, where one session’s load shouldn’t negatively affect other sessions, unless there’s a catastrophe where we run out of resources, in which case we need more nodes.

Since the REST assistant gen_server can be contacted by any node, and is registered as a viewer in the session directory, and since websocket connections are persistent I don’t need to worry about where the loadbalancer routes the browser requests. I can rely on Erlang’s message passing in the REST case, and on the connection persistence of TCP for the websocket case.

Play on everyone’s strengths

Since other languages such as C are better suited for things like SSL decryption/encryption I let the loadbalancers handle terminating HTTPS connections.

Though, mind you, had one the hardware capability and inclination, it’s quite simple to implement HTTPS using Cowboy, and even SSL connectivity between individual nodes using inet_tls and a simple cert/key .pem file to have complete end-to-end encryption. And I’ve done that in staging. However I decided not to focus on that capability, and let Erlang do what Erlang is good at and let nginx do what it’s good at.

Final words

So, using Erlang to solve a concurrency problem worked well for me again. Some might say too well. Because after sorting out the server and changing the client to add features such as fullscreen capability and such, my services were no longer desired, as they already had a product, I had no vesting, and with just a week’s worth of notice period I was expendable.

The server, though, still chugs along. Code documented well enough for others. And karma works, not by any supernatural means, but rather by the age-old manner of word-of-mouth, which has a tendency to bite people in the ass, when it comes to hiring talent.

Oh well, I’m on to bigger and better projects anyway. More on that at another time!

If you have questions or suggestions, don’t hesitate, I’m @henrythor